RuntimeError: No GPU Found. A GPU Is Needed For Quantization. – Here’s How to Fix It!

When I first encountered the “RuntimeError: no GPU found. A GPU is needed for quantization,” I was frustrated because my project came to a halt. After hours of troubleshooting, I discovered my GPU drivers were outdated. Updating the drivers and configuring CUDA properly finally resolved the issue, allowing me to continue my work seamlessly.

“RuntimeError: no GPU found. A GPU is needed for quantization.” means your software can’t detect a GPU, which is essential for speeding up the quantization process. This can happen due to missing GPU drivers, incorrect CUDA installation, or configuration issues. Check and update your drivers and CUDA setup to resolve this.

Are you frustrated by the “RuntimeError: no GPU found. A GPU is needed for quantization” message? Don’t worry! In this article, we’ll break down what this error means and guide you through simple steps to fix it.

Understanding “RuntimeError: No GPU Found”:

“RuntimeError: no GPU found” means that the software you’re using cannot detect a Graphics Processing Unit (GPU) on your computer, which is necessary for the task you are trying to perform.

GPUs are powerful processors that handle complex calculations quickly, which is especially important for tasks like machine learning and quantization. When this error appears, it indicates that the software expects to use a GPU to speed up these processes but cannot find one.

This could be due to missing or misconfigured GPU drivers, CUDA not being installed or set up properly, the GPU being disabled, or the environment not having access to the GPU.

Why Does “RuntimeError: No GPU Found” Happen?

“RuntimeError: no GPU found” happens because your computer or software cannot detect a GPU, which is necessary for certain tasks like quantization. This can be due to missing or outdated GPU drivers, incorrect CUDA installation, the GPU being disabled in BIOS settings, or improper configuration of your software environment.


How To Fix “RuntimeError: No GPU Found”?

1. Check CUDA Installation:

- Open your terminal and type `nvcc --version`.

- If not installed, download and install CUDA from the NVIDIA website.

2. Verify GPU Drivers:

- Open your terminal and type `nvidia-smi`.

- If it doesn’t show GPU details, download and install the correct drivers from the NVIDIA Driver Downloads page.
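The two checks above can also be scripted. Here is a minimal Python sketch that looks for `nvcc` and `nvidia-smi` on your PATH and runs them. The helper names `probe_tool` and `check_gpu_tools` are made up for this example, and a missing `nvcc` does not always mean a broken setup, since some frameworks bundle their own CUDA runtime.

```python
import shutil
import subprocess


def probe_tool(name, args):
    """Return the tool's output, or None if it is missing or fails."""
    path = shutil.which(name)
    if path is None:
        return None  # not on PATH, so probably not installed
    try:
        result = subprocess.run(
            [path, *args], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    return result.stdout.strip()


def check_gpu_tools():
    """Run the same two commands as steps 1 and 2 and collect the results."""
    return {
        "nvcc": probe_tool("nvcc", ["--version"]),
        "nvidia-smi": probe_tool("nvidia-smi", []),
    }


if __name__ == "__main__":
    for tool, output in check_gpu_tools().items():
        status = "found" if output is not None else "missing or not working"
        print(f"{tool}: {status}")
```

If `nvidia-smi` is reported as missing, start with the driver; if only `nvcc` is missing, the CUDA toolkit is the likelier gap.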

3. Enable GPU in BIOS:

- Restart your computer and enter the BIOS setup (usually by pressing a key like F2, F10, DEL, or ESC during startup).

- Ensure the GPU is enabled in the BIOS settings.

4. Configure Virtual Environment:

If using a virtual environment, ensure CUDA is accessible. For example, in Anaconda, install the CUDA toolkit:

    conda install cudatoolkit

5. Provide GPU Access In Docker:

If using Docker, run the container with GPU access:

    docker run --gpus all -it <image-name>

6. Install GPU-Compatible Frameworks:

For PyTorch, install the GPU version:

    pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu113

For TensorFlow, install the GPU version:

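    pip install tensorflow

Recent TensorFlow releases include GPU support in the main `tensorflow` package; the separate `tensorflow-gpu` package is no longer maintained.

7. Set Environment Variables: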

Some frameworks require specific environment variables. For example, `CUDA_VISIBLE_DEVICES` controls which GPUs CUDA programs such as TensorFlow and PyTorch can see:

    export CUDA_VISIBLE_DEVICES=0
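A wrongly set `CUDA_VISIBLE_DEVICES` can hide every GPU from your framework, so it is worth checking what the variable currently allows. The sketch below is a rough interpreter for its value. The function name `describe_visible_devices` is hypothetical, and the function only reads the variable; it cannot tell whether the listed indices exist on your machine.

```python
import os


def describe_visible_devices(env=None):
    """Explain what CUDA_VISIBLE_DEVICES currently allows."""
    env = os.environ if env is None else env
    value = env.get("CUDA_VISIBLE_DEVICES")
    if value is None:
        return "unset: all GPUs are visible"
    visible = []
    for item in value.split(","):
        item = item.strip()
        # This sketch treats an empty entry or -1 as invalid; CUDA hides
        # every device listed from the first invalid entry onward.
        if item == "" or item == "-1":
            break
        visible.append(item)
    if not visible:
        return "no GPUs are visible to CUDA programs"
    return "visible devices: " + ", ".join(visible)


if __name__ == "__main__":
    print(describe_visible_devices())
```

An empty value or `-1` is a common way to force CPU-only runs, which is exactly the situation that can trigger the "no GPU found" error.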

Why Do I Need A GPU For Quantization?

You need a GPU for quantization because it significantly speeds up the process. Quantization reduces the precision of a model’s parameters, making the model faster and more efficient in terms of memory usage.

GPUs are designed to handle many calculations simultaneously, which makes them ideal for this task. Using a GPU ensures that quantization is performed quickly and efficiently, enabling smoother and faster machine learning operations.
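To make "reducing precision" concrete, here is a small pure-Python sketch of 8-bit affine quantization, one common scheme. The function names and the sample weights are invented for illustration; real libraries use tuned kernels rather than Python lists.

```python
def quantize_int8(values):
    """Affine-quantize floats to int8 and return (codes, scale, zero_point)."""
    lo = min(values + [0.0])  # the representable range must include 0.0
    hi = max(values + [0.0])
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if all values are 0
    zero_point = round(-128 - lo / scale)
    codes = [
        max(-128, min(127, round(v / scale) + zero_point)) for v in values
    ]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(c - zero_point) * scale for c in codes]


if __name__ == "__main__":
    weights = [-0.62, -0.1, 0.0, 0.37, 1.24]  # made-up example weights
    codes, scale, zero_point = quantize_int8(weights)
    restored = dequantize(codes, scale, zero_point)
    for original, code, approx in zip(weights, codes, restored):
        print(f"{original:+.3f} -> {code:4d} -> {approx:+.3f}")
```

Each weight now needs 1 byte instead of the 4 bytes of a 32-bit float, at the cost of a small rounding error per value.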


Running PyTorch Quantized Model On CUDA GPU:

Quantizing a PyTorch model and running it on a machine with a CUDA GPU involves several steps. Here’s a simple, step-by-step guide:

1. Install Necessary Packages:

First, make sure you have the necessary packages installed, including the CUDA version of PyTorch.

    pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu113

2. Load Your Model:

Load your pre-trained model. For example, let’s use a ResNet18 model.

import torch

import torchvision.models as models

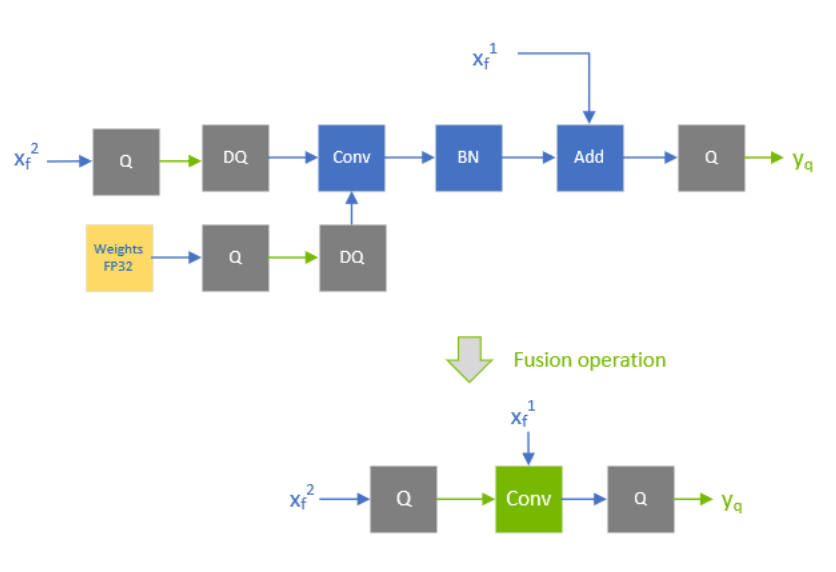

model = models.resnet18(pretrained=True)3. Quantize the Model:

Quantization reduces the precision of the model’s weights and activations. Prepare the model for quantization and then quantize it.

    # Post-training quantization expects the model in evaluation mode
    model.eval()

    # Define the quantization configuration (fbgemm targets x86 CPUs)
    model.qconfig = torch.quantization.get_default_qconfig('fbgemm')

    # Prepare the model for quantization (inserts observers)
    model_fp32_prepared = torch.quantization.prepare(model)

    # Calibrate the model (run a few batches of data through the model)
    # Assuming you have a calibration data loader that yields input tensors
    with torch.no_grad():
        for batch in calibration_data_loader:
            model_fp32_prepared(batch)

    # Convert the prepared model to a quantized version
    model_int8 = torch.quantization.convert(model_fp32_prepared)

4. Move The Model To The Target Device:

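Move the quantized model to the device that will run inference. The `fbgemm` configuration used above produces int8 kernels for x86 CPUs, so the converted model generally has to stay on the CPU, even on a machine with a CUDA GPU.

    device = torch.device('cpu')  # eager-mode int8 kernels run on the CPU
    model_int8.to(device)

5. Running Inference: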

Use the quantized model for inference on the GPU. Make sure your input data is also moved to the GPU.

# Dummy input tensor

input_tensor = torch.randn(1, 3, 224, 224).to(device)

# Run the quantized model on the GPU

output = model_int8(input_tensor)

# Print the output

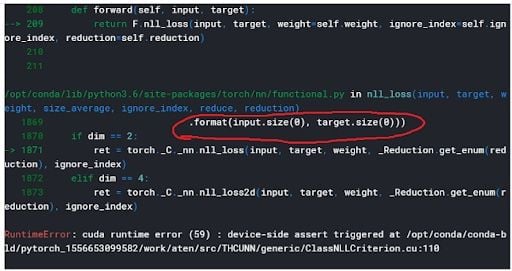

print(output)Runtimeerror In Torch.Quantization.Convert After Qat On GPU:

A “RuntimeError in torch.quantization.convert after QAT on GPU” typically happens when you call `torch.quantization.convert` on a Quantization-Aware Training (QAT) model that is still on the GPU. The training phase of QAT can run on the GPU, but the conversion step produces int8 modules whose kernels target the CPU.

To resolve this, perform the conversion on the CPU. Move the model from the GPU to the CPU with `model.to('cpu')`, switch it to evaluation mode with `model.eval()`, and then convert it to the quantized version. Run the converted model on the CPU as well; GPU inference with int8 weights generally requires a separate GPU-oriented toolchain.

How Can I Check If My GPU Is Detected?

To check if your GPU is detected, you can use a simple command in your terminal or command prompt. Open your terminal and type `nvidia-smi` and press Enter. This command will display detailed information about your NVIDIA GPU, including its status, memory usage, and driver version.

If your GPU is detected, you’ll see this information. If not, it may indicate that your GPU drivers are not installed correctly or your GPU is not properly connected.
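If you want the same information inside a script, `nvidia-smi` can print selected fields as CSV through its `--query-gpu` and `--format` options. The sketch below assumes those options are available in your driver version; `parse_gpu_csv` and `detect_gpus` are hypothetical helper names.

```python
import shutil
import subprocess

QUERY = [
    "--query-gpu=name,driver_version,memory.total",
    "--format=csv,noheader",
]


def parse_gpu_csv(text):
    """Turn nvidia-smi CSV rows into a list of dictionaries."""
    gpus = []
    for line in text.strip().splitlines():
        fields = [field.strip() for field in line.split(",")]
        if len(fields) == 3:
            name, driver, memory = fields
            gpus.append({"name": name, "driver": driver, "memory": memory})
    return gpus


def detect_gpus():
    """Return detected NVIDIA GPUs, or an empty list if none are reported."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", *QUERY], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = detect_gpus()
    print(gpus if gpus else "No NVIDIA GPU reported by nvidia-smi")
```

An empty list points to the same causes as a failing `nvidia-smi` command: a missing driver or a GPU the system cannot see.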

Can Reinstalling CUDA Help Resolve “RuntimeError: No GPU Found. A GPU Is Needed For Quantization.”?

Reinstalling CUDA can help resolve the “RuntimeError: no GPU found. A GPU is needed for quantization” because it ensures that the necessary software components are correctly installed and configured for your GPU. If CUDA was not installed properly or has become corrupted, reinstalling it can fix these issues.

Make sure to download the correct version for your system from the NVIDIA website and follow the installation instructions carefully. This can restore the connection between your software and GPU, allowing the quantization process to utilize the GPU properly.

Why Does “RuntimeError: No GPU Found. A GPU Is Needed For Quantization.” Occur On A Laptop?

The “RuntimeError: no GPU found. A GPU is needed for quantization” can occur on a laptop if the laptop’s GPU is not detected or enabled. This can happen if the GPU drivers are missing or outdated, the GPU is disabled in the BIOS or power settings, or if CUDA is not installed or configured correctly. To fix this, ensure that your laptop’s GPU drivers and CUDA are properly installed and that the GPU is enabled in the BIOS and power settings.

Fix “RuntimeError: No GPU Found. A GPU Is Needed For Quantization.” On A Cloud VM:

To fix “RuntimeError: no GPU found. A GPU is needed for quantization” on a cloud VM, first ensure that the VM is configured with GPU support. Install the necessary GPU drivers by following your cloud provider’s instructions. Next, install CUDA and verify its installation with `nvcc --version`.

Finally, configure your machine learning environment (like PyTorch or TensorFlow) to use the GPU. This setup ensures your cloud VM can detect and utilize the GPU for quantization tasks.

Troubleshoot GPU Detection Issues:

To troubleshoot GPU detection issues, start by ensuring your GPU drivers are installed and up-to-date. Check the GPU status using `nvidia-smi` in your terminal. Verify that CUDA is installed correctly with `nvcc --version`.

Make sure the GPU is enabled in your BIOS settings. If using a virtual environment or Docker, ensure they have access to the GPU. These steps help identify and fix common problems preventing your system from detecting the GPU.


How Can I Check If My Code Is Using The GPU In PyTorch?

To check if your code is using the GPU in PyTorch, you can add a simple line of code to your script. After moving your model to the GPU with `model.to('cuda')` and your data with `input_tensor = input_tensor.to('cuda')`, you can add:

    print(torch.cuda.is_available())

If this prints `True`, PyTorch can see a CUDA GPU. Additionally, you can use:

    print(next(model.parameters()).device)

to confirm that your model’s parameters are on the GPU. If the output shows `cuda:0`, then your model is on the first GPU.
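For a slightly fuller picture, the checks can be wrapped in one helper. This is a sketch only: `torch_gpu_report` is a made-up name, and the function is written so that it still returns a result when PyTorch is not installed.

```python
def torch_gpu_report():
    """Summarize what PyTorch can see; works even if PyTorch is missing."""
    try:
        import torch
    except ImportError:
        return {
            "torch_installed": False,
            "cuda_available": False,
            "cuda_build": None,
            "devices": [],
        }
    available = torch.cuda.is_available()
    devices = []
    if available:
        devices = [
            torch.cuda.get_device_name(i)
            for i in range(torch.cuda.device_count())
        ]
    return {
        "torch_installed": True,
        "cuda_available": available,
        "cuda_build": torch.version.cuda,  # None on CPU-only builds
        "devices": devices,
    }


if __name__ == "__main__":
    for key, value in torch_gpu_report().items():
        print(f"{key}: {value}")
```

If `cuda_build` is `None`, you likely have a CPU-only PyTorch build, and no driver change will make the GPU visible until you install a CUDA build.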

FAQs:

1. What Does CUDA Mean?

CUDA stands for Compute Unified Device Architecture and is a platform created by NVIDIA that allows software to use your GPU for fast computing tasks like machine learning and graphics processing.

2. How Do I Know If CUDA Is Installed?

To know if CUDA is installed, open your terminal and type `nvcc --version`. If you see version information, CUDA is installed.
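The version that `nvcc` reports is also useful when picking a PyTorch wheel, because wheel tags such as `cu113` are the CUDA release with the dot removed. Below is a small sketch of that mapping; the function names are hypothetical, the sample text only mimics the usual "release X.Y" line, and PyTorch publishes wheels for only some CUDA releases, so treat the result as a hint.

```python
import re


def parse_nvcc_release(output):
    """Extract the CUDA release (e.g. '11.3') from `nvcc --version` output."""
    match = re.search(r"release (\d+)\.(\d+)", output)
    if match is None:
        return None
    return f"{match.group(1)}.{match.group(2)}"


def wheel_tag(release):
    """Map a CUDA release such as '11.3' to a tag such as 'cu113'."""
    major, minor = release.split(".")
    return f"cu{major}{minor}"


if __name__ == "__main__":
    sample = "Cuda compilation tools, release 11.3, V11.3.109"
    release = parse_nvcc_release(sample)
    print(release, wheel_tag(release))
```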

3. What Are GPU Drivers?

GPU drivers are software that allows your operating system to communicate with your GPU.

4. Can Outdated Software Cause “RuntimeError: No GPU Found. A GPU Is Needed For Quantization.”?

Yes, outdated software can cause the “RuntimeError: no GPU found. A GPU is needed for quantization” error. Make sure you have the latest versions of CUDA, GPU drivers, and your machine learning frameworks to resolve this issue.

5. Can Multiple GPUs Cause “RuntimeError: No GPU Found. A GPU Is Needed For Quantization.”?

Yes, multiple GPUs can cause “RuntimeError: no GPU found. A GPU is needed for quantization.” if the software isn’t configured to use the correct GPU. Ensure the right GPU is specified or accessible in your settings.
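One way to specify the GPU from Python is to set `CUDA_VISIBLE_DEVICES` before your framework is imported. The helper below is a sketch with an invented name, `pin_gpu`; it must run before PyTorch or TensorFlow initializes CUDA, otherwise the setting has no effect.

```python
import os


def pin_gpu(index, env=None):
    """Restrict CUDA programs started from this process to one GPU."""
    if index < 0:
        raise ValueError("GPU index must be zero or positive")
    env = os.environ if env is None else env
    env["CUDA_VISIBLE_DEVICES"] = str(index)
    return env["CUDA_VISIBLE_DEVICES"]


if __name__ == "__main__":
    pin_gpu(0)  # call this before importing torch or tensorflow
    print("CUDA_VISIBLE_DEVICES =", os.environ["CUDA_VISIBLE_DEVICES"])
```

After pinning, the chosen GPU shows up inside the process as device 0, whatever its index is on the host.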

6. Why Is My GPU Not Detected In A Docker Container?

Your GPU might not be detected in a Docker container if the container isn’t started with GPU access. Make sure to run the container with the `--gpus all` flag like this:

    docker run --gpus all -it <image-name>

7. How Do I Give Docker Access To My GPU?

To give Docker access to your GPU, run the container with the `--gpus all` flag. The host also needs the NVIDIA Container Toolkit installed for this flag to work:

    docker run --gpus all -it <image-name>

This command allows Docker to use all available GPUs on your system.
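From inside the container, a quick way to see whether GPU access was granted is to look for NVIDIA device files. This is a heuristic sketch for Linux containers, assuming the driver exposes files such as `/dev/nvidia0` and `/dev/nvidiactl`; the function names are hypothetical.

```python
import glob
import os


def nvidia_device_nodes(dev_dir="/dev"):
    """List NVIDIA device files, e.g. /dev/nvidia0 and /dev/nvidiactl."""
    return sorted(glob.glob(os.path.join(dev_dir, "nvidia*")))


def container_has_gpu(dev_dir="/dev"):
    """Heuristic: GPU access is likely if NVIDIA device files are present."""
    return len(nvidia_device_nodes(dev_dir)) > 0


if __name__ == "__main__":
    if container_has_gpu():
        print("NVIDIA device files found:", nvidia_device_nodes())
    else:
        print("No NVIDIA device files; was the container started with --gpus?")
```

If the files are missing, restart the container with the `--gpus all` flag rather than reinstalling drivers inside the image.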

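8. How Do I Install The GPU Version Of TensorFlow?

To install TensorFlow with GPU support, use the following command. Recent releases include GPU support in the main `tensorflow` package, and the separate `tensorflow-gpu` package is no longer maintained:

    pip install tensorflow

9. What Environment Variables Should I Set For TensorFlow?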

For TensorFlow, set the `CUDA_VISIBLE_DEVICES` environment variable to specify which GPU devices TensorFlow can use. For example:

    export CUDA_VISIBLE_DEVICES=0 # Sets TensorFlow to use GPU 0

10. What Should I Do If My GPU Is Not Available In PyTorch?

If your GPU is not available in PyTorch, check if CUDA and GPU drivers are properly installed and up-to-date. Also, make sure the GPU is enabled and accessible in your environment settings.

Final Words:

The “RuntimeError: no GPU found. A GPU is needed for quantization” error indicates that your setup lacks GPU access for efficient quantization. By installing correct drivers, configuring CUDA, and ensuring the GPU is enabled, you can resolve this issue and speed up your machine learning tasks.


James George is a GPU expert with 5 years of experience in GPU repair. On Techy Cores, he shares practical tips, guides, and troubleshooting advice to help you keep your GPU in top shape. Whether you’re a beginner or a seasoned tech enthusiast, James’s expertise will help you understand and fix your GPU issues easily.