RuntimeError: GPU Is Required To Quantize Or Run Quantize Model. – Read Our Guide!

While working on my deep learning project, I hit a roadblock with the error ‘RuntimeError: GPU is required to quantize or run quantize model.’ It was frustrating to realize my laptop’s CPU couldn’t handle the task, but upgrading to a GPU setup made all the difference.

The “RuntimeError: GPU is required to quantize or run quantize model.” error means the library you are using needs a GPU for this step and could not find a usable one. Quantizing models and running quantized models are heavy tasks that GPUs handle far better than CPUs. To fix this, make sure you have a supported GPU, the right drivers, and a GPU-enabled build of your machine learning framework.

Struggling with the “RuntimeError: GPU is required to quantize or run quantize model.” error? Discover why a GPU is crucial for your machine learning tasks and learn how to fix this issue with our easy-to-follow guide. We’ll walk you through the steps to set up your GPU and get your models running smoothly.

What Does The Error “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Mean?

The error “RuntimeError: GPU is required to quantize or run quantize model.” means that the code you are running needs a Graphics Processing Unit (GPU), typically an NVIDIA GPU that your framework reaches through CUDA, and could not find one it can use.

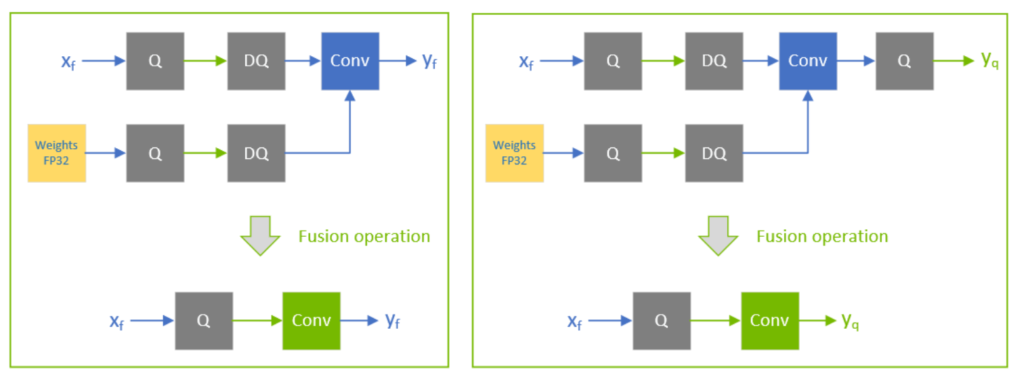

Quantization, which reduces the precision of the numbers in a machine learning model to make it faster and smaller, is computationally heavy for large models, and many quantization tools are written to rely on a GPU’s parallel processing power.

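If you don’t have a GPU, or your framework can’t see the one you have (for example, because the NVIDIA driver is missing, a CPU-only build of PyTorch is installed, or `CUDA_VISIBLE_DEVICES` hides the device), the library raises this error instead of falling back to your CPU.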
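If you work in Python, a short script can tell the usual reasons PyTorch cannot see a GPU apart before you change any hardware. The sketch below assumes a PyTorch-based setup (the error text alone does not say which library raised it); `diagnose_cuda` is a hypothetical helper name, while `torch.version.cuda`, `torch.version.hip` and `torch.cuda.is_available()` are the standard PyTorch attributes it reads.

```python
import os

try:
    import torch
except ImportError:  # PyTorch is not installed at all
    torch = None


def diagnose_cuda():
    """Return notes on why PyTorch may not see a GPU (an empty list means CUDA looks usable)."""
    notes = []
    if torch is None:
        notes.append("PyTorch is not installed.")
        return notes
    # Both are None on CPU-only wheels; AMD ROCm builds set version.hip instead of version.cuda.
    if torch.version.cuda is None and getattr(torch.version, "hip", None) is None:
        notes.append(f"PyTorch {torch.__version__} is a CPU-only build.")
    visible = os.environ.get("CUDA_VISIBLE_DEVICES")
    if visible is not None and visible.strip() in ("", "-1"):
        notes.append(f"CUDA_VISIBLE_DEVICES={visible!r} hides every GPU from CUDA.")
    if not torch.cuda.is_available():
        notes.append("torch.cuda.is_available() is False: no usable GPU/driver was found.")
    return notes


if __name__ == "__main__":
    problems = diagnose_cuda()
    if problems:
        print("\n".join(problems))
    else:
        print(f"CUDA looks usable: {torch.cuda.get_device_name(0)}")
```

An empty result means PyTorch can reach a GPU, so the problem lies elsewhere (for example, in the quantization library's own version requirements).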

Why Is A GPU Needed For Quantizing Models?

A GPU is often required, or at least strongly preferred, for quantizing models for several reasons:

- High Computational Power: GPUs can perform many calculations simultaneously, making them much faster than CPUs for tasks that involve large amounts of data, like quantizing models.

- Efficient Parallel Processing: Quantization involves complex mathematical operations that can be done in parallel. GPUs are designed to handle such parallel tasks efficiently.

- Speed: Quantizing a model on a CPU can be very slow, especially for large models. GPUs significantly speed up the process, saving time and resources.

- Better Performance: Quantized models often need to be tested and fine-tuned, processes that benefit from the speed and efficiency of a GPU.

- Framework Requirements: Some quantization tools built on frameworks like PyTorch and TensorFlow (for example, some GPTQ-based libraries) implement parts of the process only for CUDA GPUs and check for a GPU before they start.

- Memory Bandwidth: GPUs typically have higher memory bandwidth than CPUs, allowing them to handle large datasets and model parameters more effectively during quantization.

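- Precision Handling: GPUs have dedicated hardware for low-precision arithmetic (such as 8-bit integer and 16-bit floating-point operations), which quantized models rely on to run quickly. The rounding itself gives comparable results on a CPU; the GPU’s advantage is speed.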

How Can I Check If I Have A GPU Available To Fix The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

To check if you have a GPU available on your system, follow these simple steps:

Open a Terminal or Command Prompt:

On Windows, you can open Command Prompt by searching for “cmd” in the Start menu. On macOS or Linux, open the Terminal application.

Check for NVIDIA GPUs:

Type the command `nvidia-smi` and press Enter. This command checks for NVIDIA GPUs and reports their status. If you see a table with details about your GPU, your system has an NVIDIA GPU and its driver is working. If you get an error or no output, your system either doesn’t have an NVIDIA GPU or the driver is not installed correctly.

Check for Other GPUs:

For other types of GPUs (like AMD), you might need to use specific commands or software provided by the GPU manufacturer. For example, on Linux, you can use `lspci | grep -i vga` to list all video devices.
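Both checks can also be scripted from Python, which is handy inside notebooks or CI jobs. This is a sketch using only the standard library; `run_tool` and `find_display_adapters` are hypothetical helper names, and the `lspci` part only works on Linux.

```python
from __future__ import annotations

import shutil
import subprocess


def run_tool(args: list[str]) -> str | None:
    """Run a command-line tool; return its stdout, or None if it is missing or fails."""
    if shutil.which(args[0]) is None:
        return None  # not on PATH: e.g. no NVIDIA driver installed, or not on Linux
    try:
        result = subprocess.run(args, capture_output=True, text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return None
    return result.stdout if result.returncode == 0 else None


def find_display_adapters() -> list[str]:
    """Rough Python equivalent of `lspci | grep -i vga` (Linux only)."""
    output = run_tool(["lspci"])
    if output is None:
        return []
    # Some data-center GPUs are listed as "3D controller" instead of "VGA compatible controller".
    return [line for line in output.splitlines()
            if "vga" in line.lower() or "3d controller" in line.lower()]


if __name__ == "__main__":
    smi = run_tool(["nvidia-smi"])
    print(smi or "nvidia-smi missing or failed: no NVIDIA GPU, or the driver is not installed.")
    for adapter in find_display_adapters():
        print(adapter)
```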

Which GPU Drivers Should I Install To Resolve “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.”?

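To resolve the “RuntimeError: GPU is required to quantize or run quantize model.” error, you need the correct GPU drivers for your system. If you have an NVIDIA GPU, download and install the NVIDIA graphics driver from the NVIDIA website. Install the CUDA Toolkit as well if you build CUDA code yourself; the prebuilt PyTorch packages bundle the CUDA runtime libraries they need, so for them an up-to-date driver is usually enough.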

Make sure to select the driver version that matches your GPU model and operating system version. For most users, the recommended Game Ready Driver or Studio Driver versions are appropriate, and you can also find specific instructions and downloads on the NVIDIA CUDA Toolkit page.

Here’s a step-by-step guide:

1. Visit the NVIDIA Driver Download Page:

- Choose your GPU model and operating system.

- Click “Search” to find the latest drivers.

2. Download and Install the Driver:

Download the latest driver version and follow the on-screen instructions to install it.

3. Download and Install the CUDA Toolkit:

- Go to the CUDA Toolkit page.

- Choose the version that matches your operating system and download it.

- Follow the installation instructions provided.
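After installing the driver, you can confirm which version is actually loaded. `nvidia-smi` accepts `--query-gpu=name,driver_version --format=csv,noheader` for machine-readable output; the Python wrapper below is a sketch, and `parse_gpu_query` and `installed_drivers` are hypothetical names.

```python
from __future__ import annotations

import shutil
import subprocess


def parse_gpu_query(csv_text: str) -> list[tuple[str, str]]:
    """Turn `--format=csv,noheader` output into (gpu_name, driver_version) pairs."""
    rows = []
    for line in csv_text.strip().splitlines():
        if "," not in line:
            continue  # skip blank or unexpected lines
        name, driver = (field.strip() for field in line.rsplit(",", 1))
        rows.append((name, driver))
    return rows


def installed_drivers() -> list[tuple[str, str]]:
    """Ask nvidia-smi which GPUs it sees and which driver version is loaded."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver utilities missing or not on PATH
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
            capture_output=True, text=True, timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    return parse_gpu_query(result.stdout) if result.returncode == 0 else []


if __name__ == "__main__":
    drivers = installed_drivers()
    for name, driver in drivers:
        print(f"{name}: driver {driver}")
    if not drivers:
        print("No NVIDIA driver detected.")
```

The plain `nvidia-smi` table also shows a “CUDA Version” field: that is the newest CUDA release the installed driver supports, which is worth comparing with the CUDA version your PyTorch or TensorFlow build was compiled for.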

How Do I Set Up My Environment Variables To Fix The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

Environment variables cannot add a GPU that isn’t installed, but they control which GPUs CUDA-based frameworks are allowed to see, and a wrong value can hide a working GPU and trigger the “RuntimeError: GPU is required to quantize or run quantize model.” error. The key variable is `CUDA_VISIBLE_DEVICES`. Start by opening a terminal or command prompt on your computer.

Then, set the `CUDA_VISIBLE_DEVICES` environment variable to specify which GPU you want to use. For example, if you have one GPU, you would type `export CUDA_VISIBLE_DEVICES=0` on macOS or Linux, or `set CUDA_VISIBLE_DEVICES=0` on Windows. This tells your machine learning framework to use the first GPU (index 0).

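The variable applies only to programs started afterwards from that same terminal session; it is not saved when you close the window, and a Python process that is already running will not pick it up. Also check that it is not set to an empty string or `-1`: either value hides every GPU, which produces this error even on a machine with a working GPU. You can print the current value with `echo $CUDA_VISIBLE_DEVICES` on macOS or Linux, or `echo %CUDA_VISIBLE_DEVICES%` in the Windows Command Prompt.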
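`CUDA_VISIBLE_DEVICES` can also be set from inside a Python script through `os.environ`, as long as that happens before the framework initialises CUDA. The sketch below does that and includes a hypothetical `describe_visibility` helper that roughly explains what a given value does; it is illustrative, not a library function.

```python
from __future__ import annotations

import os


def describe_visibility(value: str | None) -> str:
    """Explain, roughly, what a CUDA_VISIBLE_DEVICES value does."""
    if value is None:
        return "unset: every GPU is visible"
    if value.strip() in ("", "-1"):
        return "all GPUs hidden: CUDA-based libraries will report no GPU"
    # Visible GPUs are renumbered, so the first listed index becomes cuda:0.
    return f"only GPU(s) {value} visible, renumbered from 0"


# Set it before CUDA is initialised (safest: before importing torch or tensorflow);
# a process that has already initialised CUDA keeps its original device list.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"  # expose only the first GPU (index 0)
print(describe_visibility(os.environ.get("CUDA_VISIBLE_DEVICES")))
```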

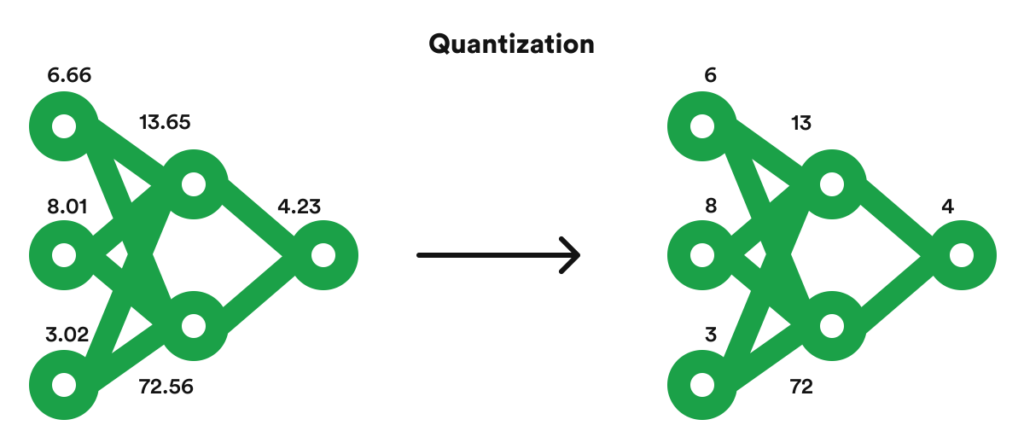

What Is Quantization In Machine Learning?

Quantization in machine learning is a technique used to make models smaller and faster by reducing the precision of the numbers used in the model. In simple terms, it involves converting the model’s weights and calculations from high-precision formats like 32-bit floating-point numbers to lower-precision formats like 8-bit integers.

By making the model’s operations less complex and requiring less memory, quantization speeds up performance and reduces the amount of power the model uses, all while aiming to keep the model’s accuracy as close as possible to the original version.
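A minimal pure-Python sketch of one common scheme, symmetric per-tensor 8-bit quantization, shows the arithmetic involved: each float is divided by a single scale factor and rounded to an integer between -127 and 127. Real toolkits add refinements such as per-channel scales and calibration data, and the function names here are hypothetical. The arithmetic is simple; the heavy lifting in practice comes from doing it, and then running the quantized model, across millions or billions of weights, which is where GPU kernels help.

```python
from __future__ import annotations


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # float value of one integer step
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map the stored integers back to approximate float values."""
    return [x * scale for x in q]


weights = [0.42, -1.3, 0.07, 0.9]   # illustrative float weights
q, scale = quantize_int8(weights)   # 8-bit integers plus one float scale
restored = dequantize(q, scale)     # each value within scale / 2 of the original
print(q, restored)
```

Storing `q` needs one byte per weight instead of four for 32-bit floats, at the cost of the small rounding error visible in `restored`.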

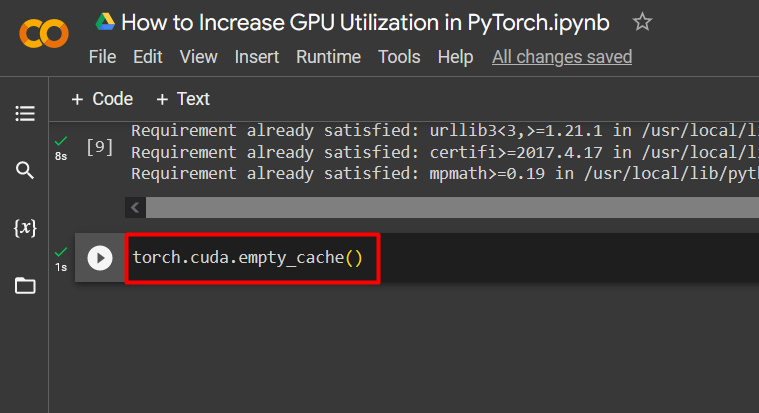

What Code Changes Are Needed To Use A GPU In PyTorch?

To use a GPU in PyTorch, you need to make a few straightforward changes to your code. First, check whether a GPU is available using `torch.cuda.is_available()`. If it is, move your model and data to the GPU by calling `.to('cuda')` on them.

For example, if you have a model named `model` and an input tensor named `input_tensor`, you would write `model = model.to('cuda')` and `input_tensor = input_tensor.to('cuda')`. Tensors are not moved in place, so always assign the result. When you perform operations, make sure the model and the data are on the same device; mixing CPU and GPU tensors raises an error.

These changes tell PyTorch to use the GPU for computations, which can significantly speed up training and inference. If a GPU isn’t available, you can still run your code on the CPU by checking the availability and conditionally moving your model and data to the appropriate device.
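Put together, the device-selection pattern looks roughly like this. The sketch assumes PyTorch is installed (the import is guarded so the file still loads without it); `pick_device` and `run_tiny_model` are hypothetical names, and the small `nn.Linear` layer stands in for your real model.

```python
try:
    import torch
    import torch.nn as nn
except ImportError:  # PyTorch is not installed; the functions below need it
    torch = None


def pick_device():
    """First visible CUDA GPU if PyTorch can see one, otherwise the CPU."""
    return torch.device("cuda" if torch.cuda.is_available() else "cpu")


def run_tiny_model():
    """Move a stand-in model and its input to the chosen device and run one forward pass."""
    device = pick_device()
    model = nn.Linear(4, 2).to(device)           # moves the layer's weights and bias
    input_tensor = torch.randn(3, 4).to(device)  # .to() returns a new tensor, so reassign
    with torch.no_grad():
        output = model(input_tensor)             # runs on the GPU when device is "cuda"
    return output


if __name__ == "__main__" and torch is not None:
    out = run_tiny_model()
    print(out.device, tuple(out.shape))
```

Choosing the device once and passing it around keeps the CPU fallback in a single place instead of scattering `.to('cuda')` calls through the code.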

Can I Use Cloud Services To Resolve “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.”?

Yes, you can use cloud services to resolve the “RuntimeError: GPU is required to quantize or run quantize model.” error. Cloud platforms like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure offer virtual machines with powerful GPUs.

These services allow you to rent GPU resources, so you don’t need to have a physical GPU on your local machine. By using these cloud services, you can easily set up an environment with the necessary GPU capabilities to perform tasks like quantizing models or running quantized models.

This approach is especially useful if you only need GPU power occasionally or don’t want to invest in expensive hardware.

FAQs:

1. When Do You Get The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

You get the “RuntimeError: GPU is required to quantize or run quantize model.” error when you try to quantize a model or run a quantized model and your framework cannot find a usable GPU, either because none is installed or because it is not set up correctly. The quantization library checks for a GPU before it starts and stops with this error when it finds none.

2. What Should I Do If My System Doesn’t Have A GPU?

If your system doesn’t have a GPU, you can either install a compatible GPU or use cloud services like AWS, Google Cloud, or Azure to access virtual machines with GPU capabilities.

3. How Do I Move A TensorFlow Model To A GPU?

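TensorFlow places operations on a visible GPU automatically; to pin them to a specific device, wrap the code in `with tf.device('/GPU:0'):`. Also make sure your TensorFlow installation has GPU support. The separate `tensorflow-gpu` package is deprecated; on Linux, recent releases can be installed with GPU support using `pip install tensorflow[and-cuda]`, while native Windows builds newer than TensorFlow 2.10 do not support GPUs (use WSL2 instead).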
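A minimal sketch, assuming TensorFlow 2.x: `tf.config.list_physical_devices("GPU")` reports which GPUs TensorFlow can see, and the `tf.device` block pins a small matrix multiplication (standing in for a real model) to the first one. The helper names are hypothetical, and the import is guarded so the file loads without TensorFlow.

```python
try:
    import tensorflow as tf
except ImportError:  # TensorFlow is not installed
    tf = None


def gpu_names():
    """Names of the GPUs TensorFlow can see; an empty list means it will run on the CPU."""
    return [device.name for device in tf.config.list_physical_devices("GPU")]


def matmul_on_first_gpu():
    """Pin a small computation to /GPU:0. Only call this when gpu_names() is non-empty."""
    with tf.device("/GPU:0"):
        a = tf.random.normal((64, 64))
        b = tf.random.normal((64, 64))
        c = tf.matmul(a, b)  # executes on the first visible GPU
    return c


if __name__ == "__main__" and tf is not None:
    names = gpu_names()
    print(names or "TensorFlow sees no GPU")
    if names:
        print(matmul_on_first_gpu().device)
```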

4. Can The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error Occur In TensorFlow?

Yes, the “RuntimeError: GPU is required to quantize or run quantize model.” error can occur in TensorFlow if the task you’re trying to perform requires a GPU and your system doesn’t have one or it’s not properly configured. This is similar to other frameworks like PyTorch.

5. What Version Of PyTorch Should I Install To Avoid The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

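To avoid the “RuntimeError: GPU is required to quantize or run quantize model.” error, install a CUDA-enabled build of PyTorch rather than the CPU-only one. The selector on pytorch.org generates the exact command for your operating system and CUDA version; for older releases built against CUDA 11.3 it looked like `pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu113`. Choose a CUDA version that your installed driver supports.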

6. Is There A Way To Test If My GPU Setup Is Correct?

Yes, you can test your GPU setup by running the `nvidia-smi` command in your terminal to check if the GPU is recognized. You can also run a small PyTorch or TensorFlow program to verify that computations are being performed on the GPU.
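One such small program, sketched in PyTorch: it runs the same matrix multiplication on the CPU and on the GPU and checks that the results agree. `gpu_smoke_test` is a hypothetical name, and the loose tolerance is a judgment call, since GPU and CPU kernels may add numbers in a different order.

```python
try:
    import torch
except ImportError:  # PyTorch is not installed
    torch = None


def gpu_smoke_test(size: int = 256) -> bool:
    """Multiply two random matrices on CPU and GPU; True if CUDA works and results agree."""
    if torch is None or not torch.cuda.is_available():
        return False
    a = torch.randn(size, size)
    b = torch.randn(size, size)
    expected = a @ b                      # reference result computed on the CPU
    actual = (a.cuda() @ b.cuda()).cpu()  # same product computed on the GPU, copied back
    # Loose tolerance: GPU and CPU kernels may sum the products in a different order.
    return bool(torch.allclose(actual, expected, rtol=1e-2, atol=1e-2))


if __name__ == "__main__":
    print("GPU OK" if gpu_smoke_test() else "GPU not usable from PyTorch")
```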

7. What Does CUDA_VISIBLE_DEVICES Do?

`CUDA_VISIBLE_DEVICES` is an environment variable that tells your system which GPU(s) to use. By setting it, you can specify which GPUs are visible to your programs, helping to manage GPU resources effectively.

8. Can Outdated GPU Drivers Cause The “RuntimeError: GPU Is Required To Quantize Or Run Quantize Model.” Error?

Yes, outdated GPU drivers can cause the “RuntimeError: GPU is required to quantize or run quantize model.” error because they may not support the necessary operations for quantization. Updating your GPU drivers can resolve this issue by ensuring compatibility.

9. Why Do Some Functions In PyTorch Require A GPU?

Some functions used with PyTorch, especially in add-on quantization libraries, require a GPU because they are implemented only as CUDA kernels: the heavy, parallel computations they perform were written for GPUs and have no CPU version. Where a CPU version does exist, a GPU still makes training and running models much faster.

Final Words:

The “RuntimeError: GPU is required to quantize or run quantize model.” error occurs when a quantization library needs a GPU and cannot find a usable one. Making sure a GPU is installed, its drivers are up to date, and your framework is a GPU-enabled build that can see it will fix this error and improve your model’s performance.

James George is a GPU expert with 5 years of experience in GPU repair. On Techy Cores, he shares practical tips, guides, and troubleshooting advice to help you keep your GPU in top shape. Whether you’re a beginner or a seasoned tech enthusiast, James’s expertise will help you understand and fix your GPU issues easily.